In this second installment, we dive into how data-driven analysis is revolutionizing key sectors — from healthcare and finance to education and climate action. These real-world examples demonstrate how organizations are leveraging data not just for insight, but for innovation, resilience, and smarter decision-making across every level.

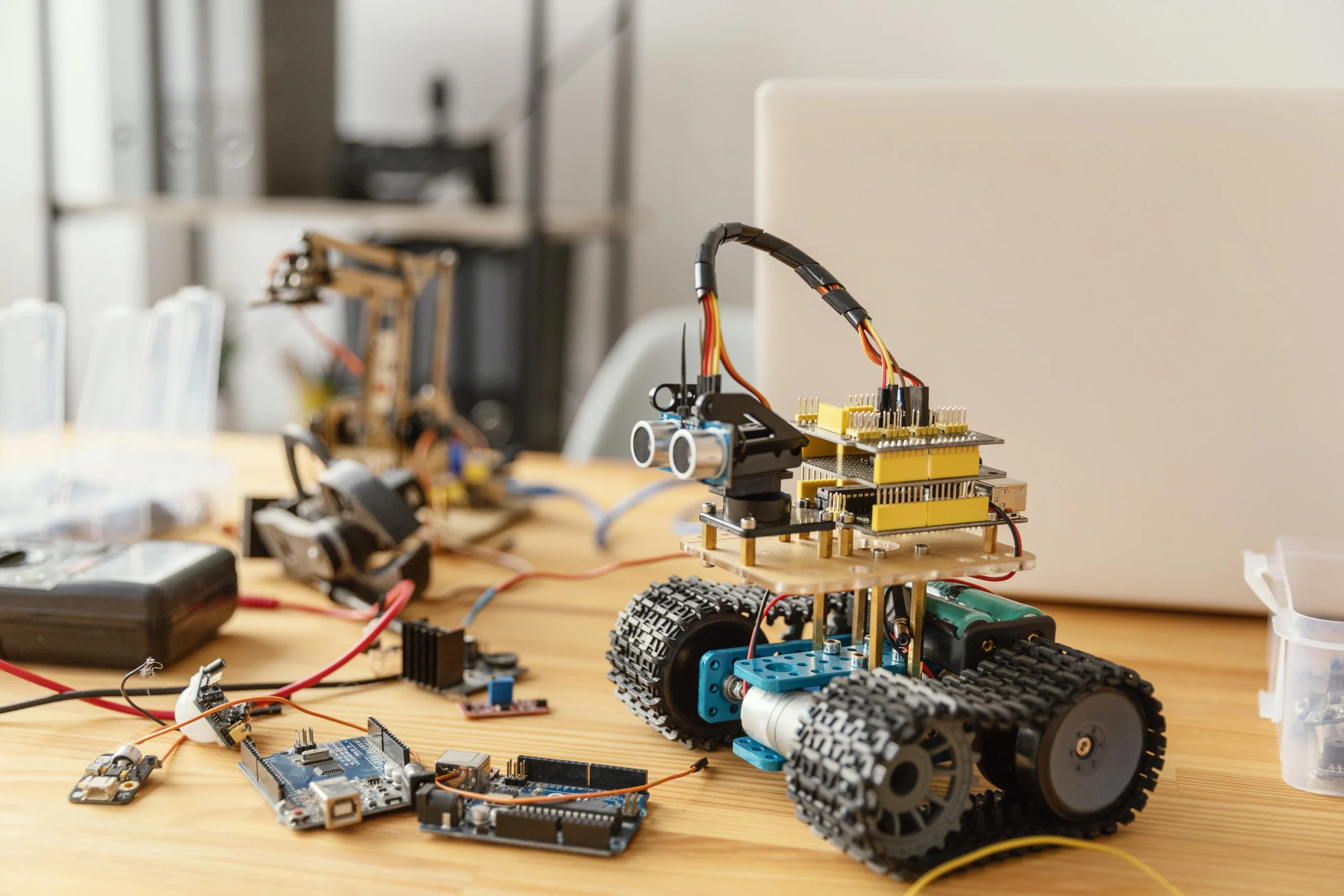

The Tools and Technologies Behind Data-Driven Analysis

Data-driven analysis relies not only on access to vast volumes of data, but also on the tools and technologies that turn raw information into actionable insights. Over the past decade, a powerful ecosystem of software, platforms, and algorithms has emerged, enabling professionals across disciplines to apply data-driven methods more effectively and at scale.

1. Data Warehousing and Data Lakes

Any data-driven analysis starts with collecting and storing data. Data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake are optimized for structured data and analytical queries. Data lakes, typically built on Hadoop (HDFS) or object storage such as Amazon S3, hold large quantities of unstructured or semi-structured data such as images, video, social media posts, and sensor output. Together, these storage systems make it possible to run complex analyses that draw on diverse data sources.
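To make "structured data and analytical queries" concrete, here is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for a warehouse. The `sales` table, its columns, and the figures are invented for illustration; a real warehouse such as BigQuery or Snowflake would be reached through its own client library and SQL dialect.

```python
import sqlite3

# In-memory SQLite database standing in for a data warehouse (assumption:
# production warehouses use their own clients and SQL dialects).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")

# Hypothetical rows, invented purely for illustration.
rows = [
    ("north", "widget", 120.0),
    ("north", "gadget", 80.0),
    ("south", "widget", 200.0),
    ("south", "widget", 50.0),
]
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

# A typical analytical query: aggregate revenue per region, largest first.
query = """
    SELECT region, SUM(amount) AS revenue, COUNT(*) AS orders
    FROM sales
    GROUP BY region
    ORDER BY revenue DESC
"""
revenue_by_region = conn.execute(query).fetchall()
conn.close()

for region, revenue, orders in revenue_by_region:
    print(f"{region}: revenue={revenue:.2f}, orders={orders}")
```

The same GROUP BY pattern carries over to a real warehouse; what changes is the scale of the table and the engine executing the query.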

2. Business Intelligence (BI) Tools

BI tools democratize data access and allow non-technical users to analyze and visualize information. Platforms like Tableau, Power BI, and Looker offer drag-and-drop interfaces, enabling stakeholders to create dashboards, perform trend analysis, and monitor KPIs without writing a single line of code. These tools play a central role in real-time decision-making and help bridge the gap between data scientists and business teams.

3. Statistical Analysis and Data Mining Software

Tools like R, SAS, and SPSS remain staples in academic and enterprise research, allowing for advanced statistical modeling, regression analysis, hypothesis testing, and pattern discovery. They are commonly used in fields where rigorous data validation and modeling accuracy are paramount, such as pharmaceuticals, social sciences, and insurance.

4. Programming Languages for Data Science

Python and R have emerged as the leading programming languages for data science. Python, with its vast libraries like pandas, NumPy, scikit-learn, and TensorFlow, enables everything from data wrangling to machine learning and deep learning. R excels in data visualization and statistical analysis. These languages allow for the development of custom models and pipelines tailored to specific analytical needs.

5. Machine Learning and Artificial Intelligence Platforms

Cloud-based machine learning platforms like Google Vertex AI, Amazon SageMaker, and Azure Machine Learning provide managed environments for building, training, and deploying predictive models. These tools help organizations identify patterns, automate decisions, and produce forecasts at a scale that is hard to reach with manual analysis. They also support natural language processing, image recognition, and other complex data tasks that traditional analytics can’t handle.

6. Data Cleaning and Preparation Tools

Data preparation is one of the most time-consuming parts of data-driven analysis. Tools like Trifacta, Talend, and Alteryx help analysts clean, merge, and transform raw data into usable formats. Good analysis depends on high-quality data, and these tools help enforce consistency, completeness, and reliability.
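The clean, merge, and transform steps can be sketched in plain Python. This is only an illustration of the idea, not how any of the named tools work internally; the record fields (`id`, `name`, `email`, `customer_id`, `amount`) and the sample values are hypothetical.

```python
def clean_customer(record):
    """Normalize one raw record: cast the id, trim whitespace, fix casing."""
    return {
        "id": int(record["id"]),
        "name": record["name"].strip().title(),
        "email": record["email"].strip().lower(),
    }


def merge_sources(customers, orders):
    """Attach each customer's order total (0.0 when they have no orders)."""
    totals = {}
    for order in orders:
        key = order["customer_id"]
        totals[key] = totals.get(key, 0.0) + order["amount"]
    return [{**c, "total_spent": totals.get(c["id"], 0.0)} for c in customers]


# Hypothetical raw inputs from two separate systems.
raw_customers = [
    {"id": "1", "name": "  ada lovelace ", "email": "ADA@Example.com "},
    {"id": "2", "name": "alan turing", "email": " alan@example.com"},
]
raw_orders = [
    {"customer_id": 1, "amount": 30.0},
    {"customer_id": 1, "amount": 12.5},
]

cleaned = [clean_customer(r) for r in raw_customers]
merged = merge_sources(cleaned, raw_orders)
for row in merged:
    print(row)
```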

7. Real-Time Data Processing Frameworks

In industries where immediacy is critical, such as finance, cybersecurity, and logistics, streaming technologies like Apache Kafka (event streaming), Apache Storm, and Spark Streaming (stream processing) provide continuous insights. They let teams react immediately to shifts in market conditions, emerging security threats, or operational bottlenecks.
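A core idea behind these frameworks is windowed aggregation over an unbounded stream of events. The sketch below shows that idea in plain Python with a time-based sliding window; it does not use Kafka, Storm, or Spark, and the class name, window size, and event values are assumptions made for illustration.

```python
from collections import deque


class SlidingWindowAverage:
    """Rolling average over the most recent `window_seconds` of events.

    Assumes a positive window and timestamps that arrive in order.
    """

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def add(self, timestamp, value):
        """Record one event and return the current windowed average."""
        self.events.append((timestamp, value))
        self.total += value
        # Evict events that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self.events and self.events[0][0] <= cutoff:
            _, old_value = self.events.popleft()
            self.total -= old_value
        return self.total / len(self.events)


# Hypothetical (timestamp_in_seconds, latency_ms) events.
stream = [(0, 100.0), (4, 110.0), (9, 90.0), (12, 200.0)]
window = SlidingWindowAverage(window_seconds=10)
averages = [window.add(ts, value) for ts, value in stream]
print(averages)
```

When the event at second 12 arrives, the event from second 0 has aged out of the 10-second window, so the last average covers only the three most recent values.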

8. Cloud Computing Infrastructure

The scalability and flexibility of cloud platforms like AWS, Google Cloud, and Microsoft Azure have made data-driven analysis more accessible than ever. Organizations no longer need to invest in expensive on-premises infrastructure. Instead, they can store petabytes of data and run complex computations on demand, scaling resources up or down based on current needs.

9. Data Governance and Security Tools

As data grows in volume and sensitivity, data governance becomes crucial. Tools like Collibra, Informatica, and OneTrust help organizations manage data access, ensure compliance with privacy laws, and maintain data integrity. These platforms are especially important for organizations subject to GDPR, HIPAA, or other data protection frameworks.

10. Collaboration and Version Control Systems

Platforms like GitHub, JupyterHub, and Databricks facilitate collaborative analysis. Data scientists can work together on models, track experiments, and share results, fostering a culture of transparency and continuous improvement.

Together, these tools form a robust technology stack that supports the entire data analysis lifecycle: from acquisition and storage to analysis and deployment. As these technologies evolve, they are becoming more integrated, more user-friendly, and more capable of handling the complexity of modern data environments.

But choosing the right tools isn’t just a technical decision — it’s strategic. It requires aligning with the organization’s goals, capabilities, and compliance needs. The best tools are those that not only process data efficiently, but also empower people to ask better questions and make smarter decisions.

Challenges and Ethical Considerations

While data-driven analysis unlocks immense potential, it also presents a host of challenges — technical, organizational, and ethical. As organizations and governments become more reliant on data for decision-making, the need to address these obstacles with foresight and integrity becomes increasingly urgent.

1. Data Quality and Accuracy

A data-driven decision is only as good as the data it relies on. Incomplete, inconsistent, outdated, or inaccurate data can lead to flawed insights. Common issues include missing values, duplicated entries, and poor data labeling. Organizations must invest in data cleaning processes, implement validation checks, and establish data stewardship roles to maintain high data quality standards.
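A validation check of the kind described above can be very small. The following sketch counts missing required values and duplicated keys in a list of records; the function name, field names, and sample rows are hypothetical, and a production pipeline would typically add type, range, and freshness checks.

```python
def quality_report(records, required_fields, key_field):
    """Count missing required values and duplicated keys in a list of dicts."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = []
    for record in records:
        for field in required_fields:
            # Treat absent keys, None, and empty strings as missing.
            if record.get(field) in (None, ""):
                missing[field] += 1
        key = record.get(key_field)
        if key in seen:
            duplicates.append(key)
        seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicate_keys": duplicates}


# Hypothetical records with typical defects.
records = [
    {"record_id": 1, "age": 34, "category": "A"},
    {"record_id": 2, "age": None, "category": "B"},
    {"record_id": 2, "age": 51, "category": ""},
    {"record_id": 3, "age": 47},
]
report = quality_report(records, required_fields=["age", "category"], key_field="record_id")
print(report)
```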

2. Data Silos and Fragmentation

In many organizations, data is stored across multiple departments or platforms with little interoperability. This fragmentation hampers holistic analysis and leads to inefficient workflows. Overcoming silos requires strong data integration strategies, data catalogs, and a unified data infrastructure that supports cross-functional access without compromising security.

3. Bias in Data and Algorithms

Data is not neutral. Historical biases, underrepresentation of certain groups, and flawed sampling can all distort the outcomes of data analysis. When machine learning models are trained on biased data, they risk perpetuating or even amplifying existing social inequalities. This has been observed in areas such as hiring algorithms, credit scoring, and facial recognition systems. To mitigate this, analysts must audit datasets, test models for fairness, and apply mitigation techniques such as reweighting training data or adversarial debiasing.
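One simple way to test a model's outputs for fairness is to compare selection rates across groups, a check often called demographic parity. The sketch below is a minimal version under stated assumptions: the group labels and decisions are invented, and demographic parity is only one of several fairness criteria, which can conflict with one another.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive outcomes per group, from (group, approved) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions: group A approved 6 of 10, group B 3 of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(decisions)
gap = demographic_parity_gap(rates)
print(rates, round(gap, 2))
```

A large gap does not prove discrimination by itself, but it signals where a closer audit of the data and model is warranted.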

4. Privacy and Consent

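The collection and use of personal data raise serious ethical and legal questions. Regulations like GDPR and CCPA give individuals rights over their data, such as the right to have it deleted, and restrict how organizations may collect and use it. GDPR, for example, requires a lawful basis for processing (consent is one such basis) and data minimization, while CCPA centers on the rights to know about, delete, and opt out of the sale of personal information. Data-driven organizations must adopt privacy-by-design principles, anonymize sensitive data when possible, and ensure that individuals retain control over their information.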
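Two of these practices, data minimization and masking of identifiers, can be sketched with the Python standard library. The example below keeps only the fields needed for analysis and replaces the identifier with a keyed hash. Note that this is pseudonymization rather than full anonymization: anyone holding the key can re-link the records. The key, field names, and record are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record, allowed_fields, id_field, secret_key):
    """Keep only the fields needed for analysis and pseudonymize the id."""
    reduced = {k: v for k, v in record.items() if k in allowed_fields}
    reduced[id_field] = pseudonymize(str(record[id_field]), secret_key)
    return reduced


# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

raw = {
    "user_id": "u-1001",
    "email": "person@example.com",
    "age_band": "30-39",
    "purchases": 7,
}
safe = minimize(raw, allowed_fields={"age_band", "purchases"},
                id_field="user_id", secret_key=SECRET_KEY)
print(safe)
```

Because the hash is keyed and deterministic, the same user maps to the same token across datasets, which preserves joins without exposing the raw identifier.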

5. Security Risks and Data Breaches

Storing and analyzing large volumes of sensitive data makes organizations attractive targets for cyberattacks. Breaches not only expose personal information but also erode trust and result in severe financial and reputational damage. Implementing robust cybersecurity practices, including encryption, access controls, and real-time monitoring, is essential to safeguard data assets.

6. Misinterpretation and Overreliance on Data

Numbers can be misleading if interpreted without context. A correlation might be mistaken for causation, or an outlier might skew the analysis. Worse still, decision-makers may develop an overreliance on data, ignoring qualitative factors or frontline insights. Data should complement — not replace — human judgment. Critical thinking and domain expertise remain essential.
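The effect of a single outlier is easy to demonstrate. In this small sketch the order values are invented, and the last one is assumed to be a data-entry error; the mean shifts dramatically while the median barely notices.

```python
import statistics

# Hypothetical daily order values; the last entry is assumed to be an error.
order_values = [42, 38, 45, 40, 41, 39, 4000]

mean_with_outlier = statistics.mean(order_values)
median_with_outlier = statistics.median(order_values)
mean_without_outlier = statistics.mean(order_values[:-1])

print(f"mean with outlier:    {mean_with_outlier:.2f}")
print(f"median with outlier:  {median_with_outlier}")
print(f"mean without outlier: {mean_without_outlier:.2f}")
```

Whether the outlier should be dropped, corrected, or kept is exactly the kind of question that needs domain knowledge rather than a purely numerical rule.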

7. Ethical Dilemmas in Predictive Analytics

Predictive models can help organizations anticipate outcomes and intervene early — such as identifying students at risk of dropping out or patients likely to need hospitalization. However, such predictions can lead to profiling, discrimination, or self-fulfilling prophecies if used irresponsibly. Ethical frameworks must guide how predictions are acted upon, ensuring they are used to empower, not penalize.

8. Algorithmic Transparency and Accountability

Many advanced analytics systems — especially those based on deep learning — operate as black boxes. Their decision-making processes are opaque, making it hard to explain or justify outcomes. This lack of transparency can be problematic in high-stakes domains like criminal justice or finance. Explainable AI (XAI) and model interpretability techniques are therefore gaining importance, especially in regulated industries.
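One widely used, model-agnostic interpretability technique is permutation importance: shuffle one input feature and measure how much the model's error grows. The sketch below applies it to a toy stand-in for a black-box model; the model, data, and seeds are assumptions chosen so the result is easy to reason about, not a depiction of any particular XAI library.

```python
import random


def black_box_model(row):
    """Stand-in for an opaque model: uses feature 0, ignores feature 1."""
    return 3.0 * row[0] + 1.0


def mean_squared_error(model, rows, targets):
    """Average squared difference between predictions and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, feature_index, seed=0):
    """Increase in error after shuffling one feature column."""
    baseline = mean_squared_error(model, rows, targets)
    column = [row[feature_index] for row in rows]
    random.Random(seed).shuffle(column)
    shuffled_rows = [
        row[:feature_index] + [value] + row[feature_index + 1:]
        for row, value in zip(rows, column)
    ]
    return mean_squared_error(model, shuffled_rows, targets) - baseline


# Synthetic data: targets depend only on feature 0.
rng = random.Random(42)
rows = [[rng.uniform(0, 10), rng.uniform(0, 10)] for _ in range(200)]
targets = [3.0 * r[0] + 1.0 for r in rows]

importances = [
    permutation_importance(black_box_model, rows, targets, i) for i in range(2)
]
print(importances)
```

Shuffling feature 0 raises the error sharply, while shuffling feature 1 changes nothing, which reveals what the model relies on without opening it up.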

9. Talent and Skill Gaps

Despite growing interest in data analytics, there remains a shortage of skilled professionals who can extract, interpret, and communicate insights effectively. This gap is most pronounced in developing regions and public sector organizations. Addressing it requires investment in education, training, and interdisciplinary collaboration.

10. Governance and Strategic Alignment

For data-driven initiatives to succeed, organizations must embed analytics into their broader strategy. This includes establishing clear governance frameworks, aligning data initiatives with business objectives, and fostering a culture that values evidence-based decision-making. Without strong leadership and alignment, data initiatives risk becoming isolated projects with limited impact.

Addressing these challenges requires a proactive, cross-functional approach that combines technology with ethics, policy, and education. It’s not enough to collect data — organizations must use it responsibly, inclusively, and transparently. By doing so, they can unlock data’s full value while preserving public trust and social equity.